Processes

12.08.2011

Processes, scrum, software, software development, Technology, Uncategorized

Scrum is a process framework that has been used to manage complex product development since the early 1990s. It’s a framework within which you can employ various processes and techniques for developing software products. The authors, Ken Schwaber and Jeff Sutherland, just released an updated guide to implementing Scrum; you can get a copy by clicking here: Scrum Guide 2011, or on the picture.

Charles Bradley wrote a good comparison of the differences between the 2010 and 2011 versions, and I recommend you read it on his blog by clicking HERE.

Steve Porter, of www.Scrum.org, describes one aspect of Scrum – the Chickens and the Pigs – that is gone…

One particular change was arguably small and cosmetic, but it really has significance in my opinion. So much so, that I offered to write this brief article to explain why the change was made and how you can interpret these changes as you go about implementing them in your projects.

Every Scrum practitioner has heard the fable of the chicken and the pigs. I won’t recount it here, but it is an embedded part of Scrum lore. Ken Schwaber created the pigs and the chicken metaphor in the early days of Scrum and it has been used repeatedly to separate the people who are committed to the project from the people who are simply involved.

Over the years, the labels have generated their share of controversy. Some argue that the terms are harmful to the process because they are derogatory. Others say that the negative connotation conjures a power dynamic that drives negative behaviour. Either way, you won’t find any references to animals, barnyard or otherwise, in the new Scrum Guide.

Why was it removed? Ken and Jeff felt it was better to discuss accountability directly in the Scrum Guide, as opposed to through metaphor. However, I think I can provide some additional insight. I was present at some of the discussions that led to the 2011 update and many people, including me, found that the labels were being used in a way that does not contribute positively to a team’s ability to perform its core function.

Enjoy…r/Chuck

02.01.2010

data sharing, Open Government, privacy, Processes, security, transparency

Following up on my comments and thoughts about the Open Government Directive and Data.gov effort, I just posted five ideas on the “Evolving Data.gov with You” website and thought I would cross-post them on my blog as well…enjoy! r/Chuck

1. Funding – Data.gov cannot be another unfunded federal mandate

Federal agencies are already trying their best to respond to a stream of unfunded mandates. Requiring federal agencies to a) expose their raw data as a service and b) collect, analyze, and respond to public comments requires resources. The requirement to make data accessible to (through) Data.gov should be formally established as a component of one of the Federal strategic planning and performance management frameworks (GPRA, OMB PART, PMA) and each agency should be funded (resourced) to help ensure agency commitment towards the Data.gov effort. Without direct linkage to a planning framework and allocation of dedicated resources, success of Data.gov will vary considerably across the federal government.

2. Strategy – Revise CONOP to address the value to American citizens

As currently written, the CONOP only addresses internal activities (means) and doesn’t identify the outcomes (ends) that would result from successful implementation of Data.gov. In paragraph 1 the CONOP states “Data.gov is a flagship Administration initiative intended to allow the public to easily find, access, understand, and use data that are generated by the Federal government,” yet there is no discussion about “what data” the “public” wants or needs to know about.

The examples given in the document are anecdotal at best and (in my opinion) do not reflect what the average citizen will want to see. With all apologies to Aneesh Chopra and Vivek Kundra, I do not believe (as they suggested in the December 8th webcast) that citizens really care much about things like average airline delay times, visa application wait times, or who visited the White House yesterday.

In paragraph 1.3 the CONOP states “An important value proposition of Data.gov is that it allows members of the public to leverage Federal data for robust discovery of information, knowledge and innovation,” yet these terms are not defined. What should they mean to the average citizen (public)? I would suggest the Data.gov effort begin with a dialogue with the ‘public’ they envision using the data feeds on Data.gov; a few questions I would recommend they ask include:

- What issues about federal agency performance are important to them?

- What specific questions do they have about those issues?

- In what format(s) would they like to see the data?

I would also suggest stratifying the “public” into the different categories of potential users, for example:

- General taxpayer public, non-government employee

- Government employee seeking data to do their job

- Government agency with oversight responsibility

- Commercial/non-profit organization providing voluntary oversight

- Press, news media, blogs, and mash-ups using data to generate ‘buzz’

3. Key Partnerships – Engage Congress to participate in Data.gov

To some, Data.gov can be viewed as an end-run around the many congressional committees who have official responsibility for oversight of federal agency performance. Aside from general concepts of government transparency, Data.gov could (should) be a very valuable resource to our legislators.

Towards that end, I recommend that Data.gov open a dialogue with Congress to help ensure that Data.gov addresses the data needs of these oversight committees so that Senators and Congressmen alike can make better informed decisions that ultimately affect agency responsibilities, staffing, performance expectations, and funding.

4. Data Quality – Need process for assuring ‘good data’ on Data.gov

On page 9 of the CONOP, the example of Forbes’ use of Federal data to develop the list of “America’s Safest Cities” brings to light a significant risk associated with providing ‘raw data’ for public consumption. As you are aware, much of the crime data used for that survey is drawn from the FBI’s Uniform Crime Reporting (UCR) program.

As self-reported on the “Crime in the United States” website, “Figures used in this Report are submitted voluntarily by law enforcement agencies throughout the country. Individuals using these tabulations are cautioned against drawing conclusions by making direct comparisons between cities. Comparisons lead to simplistic and/or incomplete analyses that often create misleading perceptions adversely affecting communities and their residents.”

Because Data.gov seeks to make raw data available to a broad set of potential users, how will it address the issue of data quality within the feeds it provides? Currently, the federal agency Annual Performance Reports required under the Government Performance and Results Act (GPRA) of 1993 must include some assurance of the accuracy of the data reported; will there be a similar process for federal agency data made accessible through Data.gov? If not, what measures will be put in place to ensure that conclusions drawn from the Data.gov data sources reflect the risks associated with ‘raw’ data? And how will we know that the data made available through Data.gov is accurate and up to date?

5. Measuring success of Data.gov – a suggested (simple) framework

The OMB Open Government Directive published on December 8, 2009 includes what are (in my opinion) some undefined terms and very unrealistic expectations and deadlines for federal agency compliance with the directive. It also did not include any method for assessing progress towards the spirit and intent of the stated objectives.

I would like to offer a simple framework that the Data.gov effort can use to work (collaboratively) with federal agencies to help achieve the objectives laid out in the directive. The framework includes the following five questions:

- Are we clear about the performance questions that we want to answer with data to be made available from each of the contributing federal agencies?

- Have we identified the availability of the desired data and have we appropriately addressed security and privacy risks or concerns related to making that data available through Data.gov?

- Do we understand the burden (level of effort) required to make each of the desired data streams available through Data.gov and is the funding available (either internally or externally) to make the effort a success?

- Do we understand how the various data consumer groups (the ‘public’) will want to see or access the data and does the infrastructure exist to make the data available in the desired format?

- Do we (Data.gov and the federal agency involved) have a documented and agreed-to strategy that prepares us to digest and respond to public feedback, ideas for innovation, etc., received as a result of making data available through Data.gov?

I would recommend this framework be included in the next version of the Data.gov CONOP so as to provide a way for everyone involved to a) measure progress towards the objectives of the OMB directive and b) provide a tool for facilitating the dialogue with federal agencies and Congress that will be required to make Data.gov a success.

28.06.2009

data sharing, Information sharing, JIEM, law enforcement, Law enforcement information sharing, LEIS, N-DEx, NIEM, Processes, Strategy

Remember the old Reese’s Peanut Butter Cups commercial? “You got chocolate on my peanut butter”…“No, you got peanut butter on my chocolate”…? Well, this is one of those stories…

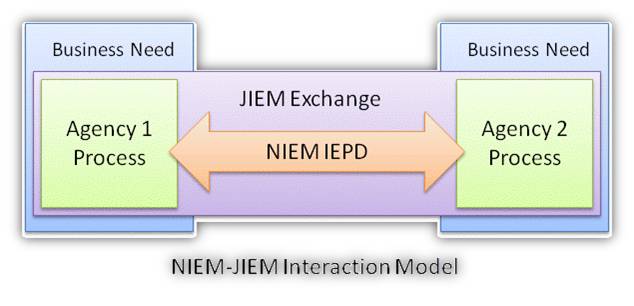

It’s no secret: the National Information Exchange Model (NIEM) is a huge success. Not only has it been embraced horizontally and vertically for law enforcement information sharing at all levels of government, but it is now spreading internationally. The it.ojp.gov website lists more than 150 justice-related Information Exchange Package Documentation (IEPD) packages based on NIEM; it’s been adopted by N-DEX, ISE-SAR, NCIC, IJIS PMIX, NCSC, OLLEISN, and many other CAD and RMS projects.

For at least the last four years, Search.org has been maintaining the Justice Information Exchange Model (JIEM), which it also developed. JIEM documents more than 15,000 justice information exchanges across 9 justice processes and 75 justice events that affect 27 different justice agencies.

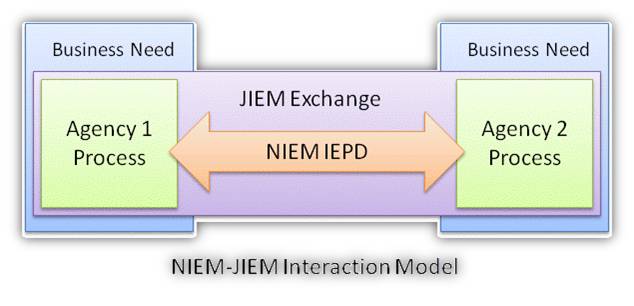

So if JIEM establishes the information exchanges required in the conduct of justice system business activities, and NIEM defines the syntactic and semantic model for the data elements within those justice information exchanges…then…

Wouldn’t it make sense for JIEM exchanges to call out specific NIEM IEPDs?

And vice-versa, wouldn’t it make sense for NIEM IEPDs to identify the specific JIEM exchanges they correspond to?
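To make the two-way linkage concrete, here is a minimal sketch of what such a cross-reference could look like as a data structure. Everything in it is an assumption for illustration: the identifiers are made up and are not real JIEM exchange or NIEM IEPD catalog entries, and neither model is claimed to publish its data this way.

```python
from collections import defaultdict

# Hypothetical identifiers, for illustration only; these are not real
# JIEM exchanges or NIEM IEPD catalog entries.
links = [
    ("jiem:arrest-report-to-prosecutor", "iepd:incident-report"),
    ("jiem:booking-to-court", "iepd:incident-report"),
    ("jiem:booking-to-court", "iepd:booking-record"),
]


def build_cross_reference(pairs):
    """Build lookups in both directions from (exchange, iepd) pairs."""
    iepds_by_exchange = defaultdict(set)
    exchanges_by_iepd = defaultdict(set)
    for exchange, iepd in pairs:
        iepds_by_exchange[exchange].add(iepd)
        exchanges_by_iepd[iepd].add(exchange)
    return dict(iepds_by_exchange), dict(exchanges_by_iepd)


iepds_by_exchange, exchanges_by_iepd = build_cross_reference(links)

# Which IEPDs does a given exchange call out?
print(sorted(iepds_by_exchange["jiem:booking-to-court"]))
# Which exchanges does a given IEPD correspond to?
print(sorted(exchanges_by_iepd["iepd:incident-report"]))
```

Keeping one list of pairs and deriving both lookups from it means the two directions cannot drift apart, which is the point of asking each model to reference the other.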

Here’s a diagram that illustrates this…

Let me know what you think..

r/Chuck

chuck@nowheretohide.org – www.nowheretohide.org

09.03.2009

data sharing, fusion center, Information sharing, intelligence center, law enforcement, privacy, Processes, security

Time Magazine just released “Fusion Centers: Giving Cops Too Much Information?” – another article in a long line of articles and papers published over the last few years by many organizations describing how fusion centers are a threat to our personal privacy. In the article, they quote the ACLU as saying that

“The lack of proper legal limits on the new fusion centers not only threatens to undermine fundamental American values, but also threatens to turn them into wasteful and misdirected bureaucracies that, like our federal security agencies before 9/11, won’t succeed in their ultimate mission of stopping terrorism and other crime”

While I disagree with their assertion that “legal limits” are the answer (we already have lots of laws governing the protection of personal privacy and civil liberties), I do think that more can be done by fusion center directors to prove to groups such as the ACLU that they are in fact operating in a lawful and proper manner.

To help a fusion center director determine their level of lawful operation, I’ve prepared the following ten-question quiz. This quiz is meant to be criterion-based, meaning that ALL ten questions must be answered “yes” to pass the test; any “no” answer puts that fusion center at risk for criticism or legal action.

Fusion Center Privacy and Security Quiz

- Is every fusion center analyst and officer instructed to comply with that fusion center’s documented policy regarding what information can and cannot be collected, stored, and shared with other agencies?

- Does the fusion center employ a documented process to establish validated requirements for intelligence collection operations, based on documented public safety concerns?

- Does the fusion center document specific criminal predicate for every piece of intelligence information it collects and retains from open source, confidential informant, or public venues?

- Is collected intelligence marked to indicate source and content reliability of that information?

- Is all collected intelligence retained in a centralized system with robust capabilities for enforcing federal, state or municipal intelligence retention policies?

- Does that same system provide the means to control and document all disseminations of collected intelligence (electronic, voice, paper, fax, etc.)?

- Does the fusion center regularly review retained intelligence with the purpose of documenting reasons for continued retention or purging of outdated or unnecessary intelligence (as appropriate) per standing retention policies?

- Does the fusion center director provide hands-on executive oversight of the intelligence review process, to include establishment of approved intelligence retention criteria?

- Are there formally documented and enforced consequences for any analyst or officer who violates standing fusion center intelligence collection or dissemination policies?

- Finally, does the fusion center Director actively promote transparency of its lawful operations to external stakeholders, privacy advocates, and community leaders?

Together, these ten points form a nice set of “Factors for Transparency” that any fusion center director can use to proactively demonstrate to groups like the ACLU that they are operating their fusion center in a lawful and proper manner.
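For anyone who wants to track the quiz in a spreadsheet or script, the all-or-nothing scoring rule can be sketched in a few lines. This is only an illustration: the function name `score_quiz` and the shape of the result are my own assumptions, not part of any official assessment tool.

```python
def score_quiz(answers):
    """Criterion-based scoring: pass only if all ten answers are "yes" (True).

    `answers` is a list of ten booleans, one per quiz question in order.
    """
    if len(answers) != 10:
        raise ValueError("the quiz has exactly ten questions")
    # Collect the 1-based numbers of every question answered "no".
    failed = [number for number, answer in enumerate(answers, start=1) if not answer]
    return {"passed": not failed, "failed_questions": failed}


# Hypothetical example: every question answered "yes" except question 3.
example = [True] * 10
example[2] = False
result = score_quiz(example)
print(result)
```

Reporting which questions failed, rather than just a pass or fail, gives the director a to-do list instead of a verdict.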

As always, your thoughts and comments are welcomed…r/Chuck

02.01.2009

CJIS, data sharing, Evaluation, Information sharing, law enforcement, Law enforcement information sharing, LEIS, Performance Measures, Processes, public safety, SOA, Strategy, Technology, Uncategorized

Tom Peters liked to say “what gets measured gets done.” The Office of Management and Budget (OMB) took this advice to heart when they started the federal Performance Assessment Rating Tool (PART) (http://www.whitehouse.gov/omb/part/) to assess and improve federal program performance so that the Federal government can achieve better results. PART includes a set of criteria in the form of questions that helps an evaluator to identify a program’s strengths and weaknesses to inform funding and management decisions aimed at making the program more effective.

I think we can take a lesson from Tom and the OMB and begin using a formal framework for evaluating the level of implementation and real-world results of the many Law Enforcement Information Sharing projects around the nation. Not for any punitive purposes, but as a proactive way to ensure that the energy, resources, and political will continue long enough to see these projects achieve what their architects originally envisioned.

I would like to propose that the evaluation framework be based on six “Standards for Law Enforcement Information Sharing” that every LEIS project should strive to comply with; they include:

1. Active Executive Engagement in LEIS Governance and Decision-Making;

2. Robust Privacy and Security Policy and Active Compliance Oversight;

3. Public Safety Priorities Drive Utilization Through Full Integration into Daily Operations;

4. Access and Fusion of the Full Breadth and Depth of Regional Data (law enforcement related);

5. Wide Range of Technical Capabilities to Support Public Safety Business Processes; and

6. Stable Base of Sustainment Funding for Operational and Technical Infrastructure Support.

My next step is to develop scoring criteria for each of these standards; three to five per standard, something simple and easy for project managers and stakeholders to use as a tool to help get LEIS “done.”

I would like to know what you think of these standards and whether you would like to help me develop the evaluation tool itself…r/Chuck

Chuck Georgo

chuck@nowheretohide.org

www.nowheretohide.org

29.08.2007

Performance Measures, Processes, SOA, Strategy

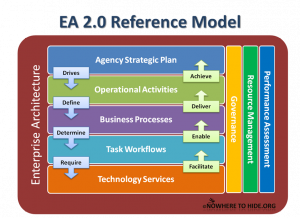

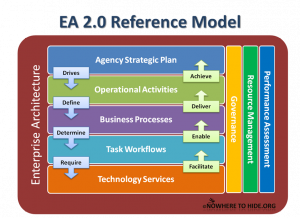

After some time thinking about my last post about EA being dead, I decided to resurrect it and marry it up with three other disciplines that I have been working in for the last 10 years: Strategic Management, Business Process Management and (now) Service Oriented Architecture. If you’re going to use the word “enterprise” in the context of architecture, then doesn’t it make sense to include the WHOLE enterprise?

So, the result of my thinking is shown below – I’m calling it EA v2.0. When you look at the diagram, the one thing to pay attention to is the relationships between the layers of the model, and not so much what’s in each layer. The fact is that fairly mature methodologies exist for every layer. This model is my attempt to build better understanding about the linkages BETWEEN the layers. In other words, there are a lot of models out there for conducting strategic planning, business process modeling, workflow management, and technology architecture; however, I have yet to find anything that explains in simple terms how all this crap ties together in a way that organization executives can understand.

So, take a look at my EA v2.0 reference model below and tell me what you think….r/Chuck
